Why LLMs Are Bad at 'First Try' and Great at Verification

I used to spend hours crafting the perfect prompt. Detailed instructions, examples, constraints—the works.

And the AI would still add random features I never asked for. Or refactor code that was perfectly fine. Or skip steps it decided were "unnecessary."

Eventually it clicked: I was fighting a losing battle. So I stopped trying to control generation and started focusing on verification.

The Failure Patterns You've Probably Seen

Before diving into why, these are the common anti-patterns:

- The Giant Prompt Syndrome: Cramming requirements, design, implementation, and improvement into a single prompt

- Overconfidence in Abstract Instructions: Expecting "think carefully" or "be thorough" to actually improve quality

- The Invisible Loop: Thinking you're iterating when you're actually spinning in circles within the same biased context

- Context Bloat: Adding "just in case" information until the actually important instructions get buried

If any of these sound familiar, you're in the right place.

The Core Insight

The claim is simple:

LLMs perform better at "verify and improve existing artifacts" than at "controlled first-time generation."

Instead of trying to get the perfect output on the first attempt, you get better results by:

- Having the LLM produce something first

- Then having it verify and improve that output

This is grounded in how LLMs actually process information.

Why Verification Works Better

At first, I assumed better prompts would lead to better first-shot output. But after enough failures, the pattern became clear: there are three interconnected reasons why LLMs become "smarter" when they have something to work with.

1. External Feedback Changes the Task

When an artifact exists, the task fundamentally transforms:

- Without artifact: "Generate something good" (vague, open-ended)

- With artifact: "Identify what's wrong with this and fix it" (specific, bounded)

The second task has clearer success criteria. The LLM isn't guessing what "good" means—it can evaluate concrete issues against concrete output.

2. Position Bias (Lost in the Middle)

Research has shown that LLMs exhibit a U-shaped attention pattern: they prioritize information at the beginning and end of their context window, while information in the middle tends to get overlooked.

When you feed an artifact as input to a new session, it naturally occupies a prominent position in the context. The LLM is literally forced to pay attention to it.

This also explains why that really important instruction you buried in paragraph 5 of your mega-prompt keeps getting ignored.

3. Task Clarity Drives Performance

"Improve this code" is a more concrete task than "write good code."

The presence of an artifact provides:

- A specific target for evaluation

- Clear boundaries for the scope of work

- Implicit success criteria (this one matters more than you'd think—"better than before" is much easier to verify than "good enough")

The Externality Spectrum

What made the biggest difference for me was reviewing in a completely separate context. Once I stopped letting the generator review its own work, the blind spots became obvious.

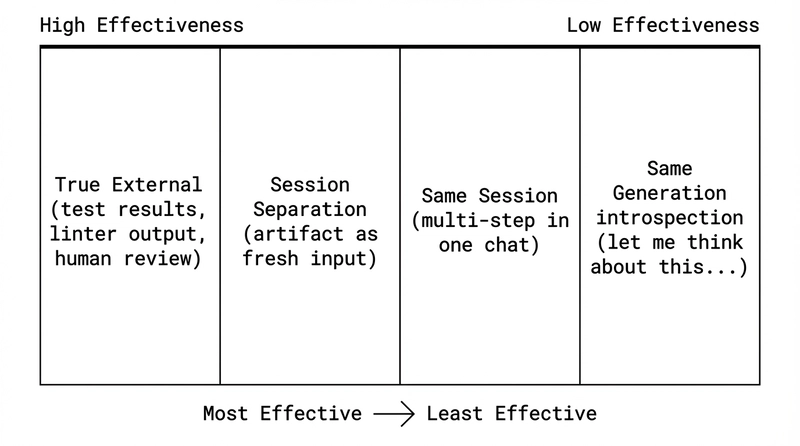

Not all feedback loops are created equal. Different approaches rank very differently in effectiveness:

The thing is, looping within the same session is fundamentally internal feedback. The LLM is still operating within its original generation biases. Only by separating context do you get true "external" perspective.

In short: if the context doesn't change, neither does the model's perspective.

Practical Implications

So what do you actually do with this?

1. Artifact-First Workflow

Stop trying to get everything right in one shot. Instead:

- Generate Phase: Get something out, even if imperfect. Don't over-specify.

- External Feedback: Run the code, execute tests, use linters

- Verification Phase (new session): Feed the artifact + feedback to a fresh context

[Generation Session]

│

├── Input: Requirements, constraints

├── Output: Artifact (code, design, etc.) + brief intent summary (1-3 lines)

│

▼

[External Feedback]

│

├── Code execution

├── Test execution

├── Linter/static analysis

│

▼

[Verification Session] ← Fresh context

│

├── Input: Artifact + intent summary + feedback results

├── Output: Improved artifact

│

▼

[Repeat or Complete]

2. Know When to Separate Sessions

Session separation isn't always necessary. Use your judgment:

| Task Type | Approach |

|---|---|

| Small, localized fixes (typos, formatting) | Same session is fine |

| Clear error fixes (with stack trace) | Same session works—external feedback (error log) exists |

| Design changes, architecture revisions | Separate sessions |

| Quality improvements, refactoring | Separate sessions |

| Direction changes, requirement pivots | Separate sessions |

Rule of thumb: If you're feeling "something isn't working," that's often a sign to start a fresh session. Your intuition about context pollution is usually right.

3. What to Pass Between Sessions

Not everything from the generation phase should go to the verification phase.

| Content | Should Pass? | Why |

|---|---|---|

| Full Chain-of-Thought log | No | Verbose, becomes noise. Important info gets lost (position bias) |

| Intent summary (1-3 lines) | Yes | Preserves the "why" compactly |

| Final decision + rationale | Yes | Useful for debugging |

| Rejected alternatives | Maybe | Only when specifically relevant |

The principle: Pass the "why," not the "how I thought about it."

Designing Your AGENTS.md

You don't need to redesign your AGENTS.md all at once. But understanding position bias changes how you think about what goes in it.

This insight has direct implications for how you structure AGENTS.md (or whatever root instruction file you use—CLAUDE.md, cursorrules, etc.).

The Position Bias Problem

Context Window Position → Attention Weight

[AGENTS.md] ← Start: HIGH attention

↓

[Middle instructions] ← Middle: LOW attention (Lost in the Middle)

↓

[User prompt] ← End: HIGH attention

If your AGENTS.md is bloated, the truly important principles get diluted. Adding more "just in case" actually makes everything weaker.

Design Principles

| What | Approach |

|---|---|

| AGENTS.md | Core principles only. ~100 lines. What must be followed on every task |

| Task-specific info | Inject via skills, command arguments, or reference files when needed |

| Why separate? | Context separation lets you compose optimal information for each task |

What Belongs in AGENTS.md

- Project purpose and domain

- Non-negotiable constraints (security, naming conventions)

- Tech stack overview

- Communication style

- Error handling behavior

What Doesn't Belong

- Individual feature specs

- API details

- Task-specific workflows

- Long code examples

- "Nice to have" information

Test: Ask "Is this needed for every task?" If no, it belongs elsewhere.

The Human Role

In Agentic Coding, you're not "using an LLM"—you're designing a system where an LLM operates.

Your Responsibilities

| Responsibility | Concrete Actions |

|---|---|

| Design external feedback | Decide which tests to run, which linters to use, what "success" means |

| Determine session boundaries | Judge when to cut context, what carries over |

| Define quality gates | Separate automated checks from human review needs |

| Maintain AGENTS.md | Keep core principles tight, prevent bloat |

| Articulate intent | Create or validate the "intent summary" that passes between sessions |

Automation vs. Human Review

Good for automation:

- Code execution, test execution

- Linters, formatters

- Type checking

- Security scans

- Applying formulaic fixes

Requires human review:

- Design decision validity

- Requirement alignment

- Session boundary judgment

- Trade-off decisions

- Validating the "why"

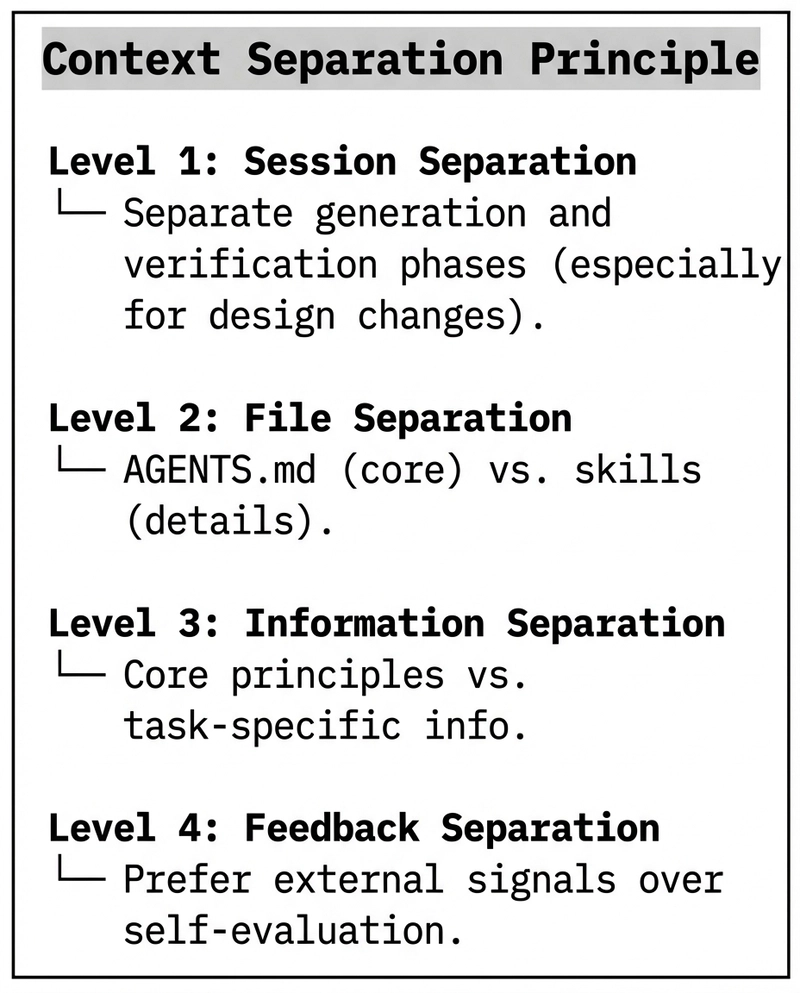

A Framework: Context Separation at Every Level

It took me a while to realize this wasn't about writing better prompts—it was about where I drew the boundaries.

You don't need to apply all of this rigidly. But when something feels off, one of these levels is usually the culprit:

The Research Behind This

These aren't just opinions—they're grounded in LLM research:

Self-Refine (Madaan et al., 2023) The generate → feedback → refine loop shows approximately 20% improvement over single-shot generation. Key insight: the improvement comes from the structured iteration, not from the model "trying harder."

Lost in the Middle (Liu et al., 2023) LLMs show U-shaped attention bias, heavily weighting the beginning and end of context while underweighting the middle. This explains why your carefully crafted instructions in paragraph 5 keep getting ignored.

LLMs Cannot Self-Correct Reasoning Yet (Huang et al., 2023) Without external feedback, self-correction doesn't work—and can actually make things worse. "Review your work" as an instruction has minimal effect; external signals (test failures, linter errors) are what drive actual improvement.

Key Takeaways

- Don't optimize for first-shot perfection. Get something out, then improve it.

- Session separation is real. The same context that generated the artifact will struggle to objectively improve it.

- External feedback is non-negotiable. Tests, linters, execution results—these are what drive quality, not "think harder" prompts.

- Keep AGENTS.md lean. Position bias means bloat actively hurts. If it's not needed for every task, move it out.

- Pass intent, not process. Between sessions, transfer the "why" in 1-3 lines, not the full thought log.

- You're a system designer. Your job isn't to use the LLM—it's to design the workflow, feedback loops, and context boundaries that let it perform.

What's Next

This article focused on why verification-oriented workflows outperform first-shot generation. One place this breaks down in practice is when we try to encode everything into AGENTS.md, assuming the model will follow it correctly. I'll cover:

- How to structure work plans that turn execution into verification

- Where to put rules so they actually get followed (hint: not all in AGENTS.md)

If you've been struggling with inconsistent LLM output or finding that your detailed prompts underperform simpler ones, try restructuring around verification. The difference is often dramatic.

What's your experience been? Did switching to a verification-first approach change anything for you?

References

- Madaan, A., et al. (2023). "Self-Refine: Iterative Refinement with Self-Feedback." arXiv:2303.17651

- Liu, N. F., et al. (2023). "Lost in the Middle: How Language Models Use Long Contexts." arXiv:2307.03172

- Huang, J., et al. (2023). "Large Language Models Cannot Self-Correct Reasoning Yet." ICLR 2024. arXiv:2310.01798

- Hsieh, C.-Y., et al. (2024). "Found in the Middle: Calibrating Positional Attention Bias Improves Long Context Utilization." ACL 2024 Findings. arXiv:2406.16008